Testing redux reducers - leveraging selectors

August 7, 2019

This post is part of a series, covering techniques for testing redux reducers. In the first installment of the series we saw that it’s better to test reducers and actions together as an integrated, cohesive unit. In this post we’ll take this idea a little further and see what happens when we pull selectors into our reducer testing strategy. Specifically, we’re going to see whether we can make our reducer unit tests even more expressive, by validating our expectations of redux state using selectors. We’ll also look briefly at the inverse - using reducers to make our selector unit tests more expressive.

In the third installment we’ll learn about writing Story Tests to validate the high-level behavior of both reducers and selectors.

Completing some todos

Let’s start by looking at an example reducer test to see whether we can improve it. As with the other posts in this series, we’ll be testing a reducer that manages a list of todos. Here, we’re testing how we track which todos are complete:

    import reducer, {addTodo,completeTodo} from './todos';

    describe('todos duck', () => {
      describe('completing todos', () => {
        it('initializes new todos as not completed', () => {
          const todoId = 'todo-a';
          const initialState = undefined;

          const nextState = reducer(
            initialState,
            addTodo({id:todoId,text:'blah'})
          );

          expect(nextState).toEqual([
            {
              id: todoId,
              text: 'blah',
              completed: false
            }
          ]);
        });

        it('allows us to mark an existing todo as completed', () => {
          const todoId = 'todo-a';
          let state = undefined;

          state = reducer(
            state,
            addTodo({id:todoId,text:'blah'})
          );

          state = reducer(
            state,
            completeTodo({id:todoId})
          );

          expect(state).toEqual([
            {
              id: todoId,
              text: 'blah',
              completed: true
            }
          ]);
        });
      });
    });

These tests do the job and are pretty readable, although they’re a little verbose.

One concern is that a reader looking at the expectations at the end of these tests might need a moment to understand which part of the expectation is relevant to these specific tests. Is the value of text important here? The answer is no. In the context of this test we don’t care at all about that field. However, since we’re using toEqual to verify our expectations we have to specify the value of every field, even though we only actually care about the completed field. Put another way, our tests are over-specified.

Over-specified tests are fragile tests

These types of over-specified tests are not only distracting, they are also more fragile. We’re asserting specific expectations regarding every aspect of our state, which means that if we make any changes to that state shape we’ll have to touch a bunch of tests that aren’t relevant to the change. For instance, let’s say we decided to track creation time for each todo by adding a createdAt timestamp. This new field would break the over-specified expectations in these tests, and we’d have to come back and update each one.
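To make that fragility concrete, here is a minimal sketch in plain JavaScript. The reducer and action creator are hypothetical stand-ins for the './todos' module (which this post never shows), `makeReducer` and its `extraFields` parameter exist only to simulate a later state-shape change, and a JSON comparison stands in for Jest’s toEqual so the snippet runs without a test framework.

```javascript
// Hypothetical stand-ins for the './todos' duck, which this post does not show.
const ADD_TODO = 'todos/add';
const addTodo = ({ id, text }) => ({ type: ADD_TODO, payload: { id, text } });

// `extraFields` simulates a later change to the state shape, such as adding createdAt.
function makeReducer(extraFields = {}) {
  return function reducer(state = [], action = {}) {
    switch (action.type) {
      case ADD_TODO:
        return [...state, { ...action.payload, completed: false, ...extraFields }];
      default:
        return state;
    }
  };
}

// Over-specified check: every field must match (JSON comparison stands in for toEqual).
function matchesWholeState(state) {
  const expected = [{ id: 'todo-a', text: 'blah', completed: false }];
  return JSON.stringify(state) === JSON.stringify(expected);
}

// Focused check: only the field this test is actually about.
function newTodoIsIncomplete(state) {
  return state[0].completed === false;
}

const action = addTodo({ id: 'todo-a', text: 'blah' });
const stateBefore = makeReducer()(undefined, action);
// An arbitrary fixed timestamp keeps the example deterministic.
const stateAfter = makeReducer({ createdAt: 1565136000000 })(undefined, action);

const fragility = {
  wholeStateBefore: matchesWholeState(stateBefore), // true
  wholeStateAfter: matchesWholeState(stateAfter),   // false: createdAt broke it
  focusedBefore: newTodoIsIncomplete(stateBefore),  // true
  focusedAfter: newTodoIsIncomplete(stateAfter),    // true
};
console.log(fragility);
```

The whole-state check fails as soon as the unrelated field appears, while the focused check is unaffected.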

There are a few options for resolving this. Instead of checking the entirety of the state, we could write multiple expectations which check only the individual fields we care about - but that can get unwieldy pretty quickly. We could also look at using some more advanced features of Jest’s matchers:

    import reducer, {addTodo,completeTodo} from './todos';

    describe('todos duck', () => {
      describe('completing todos', () => {
        it('initializes new todos as not completed', () => {
          const todoId = 'todo-a';
          const initialState = undefined;

          const nextState = reducer(
            initialState,
            addTodo({id:todoId,text:'blah'})
          );

          expect(nextState).toEqual([
            expect.objectContaining({ // 👈 fancier expectations
              id: todoId,
              completed: false
            })
          ]);
        });

        it('allows us to mark an existing todo as completed', () => {
          const todoId = 'todo-a';
          let state = undefined;

          state = reducer(
            state,
            addTodo({id:todoId,text:'blah'})
          );

          state = reducer(
            state,
            completeTodo({id:todoId})
          );

          expect(state).toEqual([
            expect.objectContaining({ // 👈 fancier expectations
              id: todoId,
              completed: true
            })
          ]);
        });
      });
    });

This is perhaps a little nicer. We are no longer distracted by an expectation verifying the value of the text field when that field is not relevant to the test at hand. More importantly, these tests are more resilient to unrelated changes - we wouldn’t need to touch these tests if we added that createdAt timestamp to our state, for example.

However, we’re also making our expectation statements more complex, and pulling in features of our testing framework that someone reading the code may well not be familiar with.
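For readers who haven’t met partial matching before, the core idea can be sketched in a few lines of plain JavaScript. The `containsFields` helper below is hypothetical and deliberately simplified (shallow, strict comparison only); it illustrates the concept and is not how Jest implements expect.objectContaining.

```javascript
// Simplified stand-in for partial matching: every expected key must be present
// with an identical value; extra keys on the actual object are ignored.
// Illustration only, not Jest's implementation of expect.objectContaining.
function containsFields(actual, expected) {
  return Object.keys(expected).every(
    (key) => key in actual && Object.is(actual[key], expected[key])
  );
}

// A todo with fields the test does not care about (text, createdAt).
const todo = { id: 'todo-a', text: 'blah', completed: false, createdAt: 1565136000000 };

const partialMatches = {
  relevantFieldsOnly: containsFields(todo, { id: 'todo-a', completed: false }), // true
  wrongValue: containsFields(todo, { id: 'todo-a', completed: true }),          // false
  missingField: containsFields(todo, { priority: 'high' }),                     // false
};
console.log(partialMatches);
```

Only the listed fields take part in the comparison, which is why unrelated additions to the state no longer break the expectation.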

Moving to selectors

Let’s try taking a different tack. Conceptually, what we’re trying to do in these tests is verify the completed-ness of a todo after certain operations. If we were writing production code that wanted to look at the completed-ness of a todo, we wouldn’t go spelunking around in our redux state directly - we’d use a selector to extract that information. For example, here’s an isTodoCompleted selector:

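    // Reports whether the todo with the given id is completed.
    // Assumes a todo with that id exists in state.
    export function isTodoCompleted(state,todoId){
      const targetTodo = state.find( (todo) => todo.id === todoId );
      return targetTodo.completed;
    }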

What would it look like if we used selectors like this in our test code, just like we would in our production code?

    import reducer, {addTodo,completeTodo} from './todos';
    import {isTodoCompleted} from './selectors';

    describe('todos duck', () => {
      describe('completing todos', () => {
        it('initializes new todos as not completed', () => {
          const todoId = 'todo-a';
          const initialState = undefined;

          const nextState = reducer(
            initialState,
            addTodo({id:todoId,text:'blah'})
          );

          // 👇 using a selector to query our state
          expect(isTodoCompleted(nextState,todoId)).toBe(false);
        });

        it('allows us to mark an existing todo as completed', () => {
          const todoId = 'todo-a';
          let state = undefined;

          state = reducer(
            state,
            addTodo({id:todoId,text:'blah'})
          );

          state = reducer(
            state,
            completeTodo({id:todoId})
          );

          // 👇 using a selector to query our state
          expect(isTodoCompleted(state,todoId)).toBe(true);
        });
      });
    });

Now we’re getting somewhere! These expectations are way easier to read, and more importantly they’re a lot more resilient to changes in our production code. If we change the shape of our state in production code we’d necessarily have to update any associated selectors, which means that tests which are using those selectors would get the update “for free”. No need to touch a single line of test code unless there’s a material change in the external behavior of our redux code. This applies even if we were to make dramatic changes to the internal implementation of behavior that’s under test. For example, we could change how we store the completed-ness of a todo - even moving that information to a totally different area of our redux state - without needing to touch a single test. This is a big win, and in my opinion the biggest motivator for moving to a more integrated approach for testing redux reducers.
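Here is a rough sketch of that last claim. Both duck variants below are hypothetical (the real './todos' and './selectors' modules aren’t shown in this post), as are the action type strings and the `runCompletionScenario` helper. The point is only that the same scenario, written against action creators and a selector, passes unchanged against two very different state shapes.

```javascript
// Hypothetical action creators shared by both variants.
const addTodo = ({ id, text }) => ({ type: 'todos/add', payload: { id, text } });
const completeTodo = ({ id }) => ({ type: 'todos/complete', payload: { id } });

// Variant A: completed-ness lives on each todo.
const flagOnTodo = {
  reducer(state = [], action = {}) {
    switch (action.type) {
      case 'todos/add':
        return [...state, { ...action.payload, completed: false }];
      case 'todos/complete':
        return state.map((todo) =>
          todo.id === action.payload.id ? { ...todo, completed: true } : todo
        );
      default:
        return state;
    }
  },
  isTodoCompleted(state, todoId) {
    const targetTodo = state.find((todo) => todo.id === todoId);
    return targetTodo.completed;
  },
};

// Variant B: completed ids are tracked in a separate part of the state.
const separateIdList = {
  reducer(state = { todos: [], completedIds: [] }, action = {}) {
    switch (action.type) {
      case 'todos/add':
        return { ...state, todos: [...state.todos, action.payload] };
      case 'todos/complete':
        return { ...state, completedIds: [...state.completedIds, action.payload.id] };
      default:
        return state;
    }
  },
  isTodoCompleted(state, todoId) {
    return state.completedIds.includes(todoId);
  },
};

// The same scenario as the tests above, written once against the public interface.
function runCompletionScenario({ reducer, isTodoCompleted }) {
  let state = undefined;
  state = reducer(state, addTodo({ id: 'todo-a', text: 'blah' }));
  const afterAdd = isTodoCompleted(state, 'todo-a');
  state = reducer(state, completeTodo({ id: 'todo-a' }));
  const afterComplete = isTodoCompleted(state, 'todo-a');
  return { afterAdd, afterComplete };
}

console.log(runCompletionScenario(flagOnTodo));     // { afterAdd: false, afterComplete: true }
console.log(runCompletionScenario(separateIdList)); // { afterAdd: false, afterComplete: true }
```

Because the scenario never reads the state directly, swapping one variant for the other requires no change to the scenario itself.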

Testing selectors

We’ve just been looking at using selectors to verify the state produced by a reducer, but we can also do the inverse - use a reducer to set up state which we then use to verify a selector’s behavior.

Here we have a new selector, getTodoCounts, which tells us how many todos we have that are complete vs incomplete:

    export function getTodoCounts(state){
      const completedTodos = state.filter( (todo) => todo.completed );

      const total = state.length;
      const complete = completedTodos.length;
      const incomplete = total - complete;

      return {
        total,
        complete,
        incomplete
      };
    }

To test this selector we want to get our redux state into a shape that simulates having a few todos in our list, one of which is completed. We could create that simulated state “by hand” in our test, but this approach would have many of the same drawbacks that we saw when verifying state by hand in our reducer tests: it would be verbose, hard to read, and would make the test implementation pretty fragile - changes to the shape of our redux state would require changes to these tests. So, instead of hand-crafting this state, let’s set up the scenario using a reducer instead:

    import reducer, {addTodo,completeTodo} from './todos';
    import {getTodoCounts} from './selectors';

    describe('todos selectors', () => {
      it('correctly counts todos in various states', () => {
        let state = undefined;

        state = reducer( state, addTodo({id:'todo-a',text:'blah'}) );
        state = reducer( state, addTodo({id:'todo-b',text:'blah'}) );
        state = reducer( state, addTodo({id:'todo-c',text:'blah'}) );
        state = reducer( state, completeTodo({id:'todo-b'}) );

        expect(getTodoCounts(state)).toEqual({
          total: 3,
          complete: 1,
          incomplete: 2
        });
      });
    });

This should be fairly self-explanatory. Starting from an empty initial state, we add three todos, complete one, and then verify that the counts that come out of our getTodoCounts selector match what just happened. This style of selector test has the same benefits that we saw with our reducer tests - the tests are more expressive and way less coupled to the internals of how we model the todo list state.
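If the repeated `state = reducer(state, ...)` lines start to feel noisy, one option is a small helper that replays a list of actions. The sketch below is an assumption-laden illustration: `stateAfter` is a hypothetical helper, and the reducer and action creators are stand-ins for the './todos' module, which this post doesn’t show. Only `getTodoCounts` is taken from above.

```javascript
// Hypothetical stand-ins for the './todos' duck, which this post does not show.
const addTodo = ({ id, text }) => ({ type: 'todos/add', payload: { id, text } });
const completeTodo = ({ id }) => ({ type: 'todos/complete', payload: { id } });

function reducer(state = [], action = {}) {
  switch (action.type) {
    case 'todos/add':
      return [...state, { ...action.payload, completed: false }];
    case 'todos/complete':
      return state.map((todo) =>
        todo.id === action.payload.id ? { ...todo, completed: true } : todo
      );
    default:
      return state;
  }
}

// The selector from this post.
function getTodoCounts(state) {
  const completedTodos = state.filter((todo) => todo.completed);
  const total = state.length;
  const complete = completedTodos.length;
  const incomplete = total - complete;
  return { total, complete, incomplete };
}

// Hypothetical helper: replay actions in order, starting from the reducer's
// own initial state (obtained by sending it an action type it does not handle).
function stateAfter(...actions) {
  const initialState = reducer(undefined, { type: 'sketch/init' });
  return actions.reduce((state, action) => reducer(state, action), initialState);
}

const state = stateAfter(
  addTodo({ id: 'todo-a', text: 'blah' }),
  addTodo({ id: 'todo-b', text: 'blah' }),
  addTodo({ id: 'todo-c', text: 'blah' }),
  completeTodo({ id: 'todo-b' })
);

const counts = getTodoCounts(state);
console.log(counts); // { total: 3, complete: 1, incomplete: 2 }
```

The scenario now reads as a plain list of what happened, and the test still never touches the state shape directly.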

Couldn’t we use snapshot testing?

Some folks might be wondering about Jest’s snapshot testing features as another way to approach verifying the state that a reducer operation creates. Here’s an example of how we could rework our reducer tests to use snapshots:

    import reducer, {addTodo,completeTodo} from './todos';

    describe('todos duck', () => {
      describe('completing todos', () => {
        it('initializes new todos as not completed', () => {
          const todoId = 'todo-a';
          const initialState = undefined;

          const nextState = reducer(
            initialState,
            addTodo({id:todoId,text:'blah'})
          );

          expect(nextState).toMatchSnapshot();
        });

        it('allows us to mark an existing todo as completed', () => {
          const todoId = 'todo-a';
          let state = undefined;

          state = reducer(
            state,
            addTodo({id:todoId,text:'blah'})
          );

          state = reducer(
            state,
            completeTodo({id:todoId})
          );

          expect(state).toMatchSnapshot();
        });
      });
    });

Well, these tests are certainly succinct, but nevertheless I’m not a fan of this approach, for two main reasons. Firstly, these tests no longer communicate expected behavior. Secondly, they are extremely brittle.

Looking at this test, can we tell what these addTodo and completeTodo actions are supposed to do to our redux state? We can guess based on the descriptions of the tests and the names of the actions themselves, but the test itself doesn’t communicate anything other than “it should work the same way it did last time”.

On the flip side, if anything at all changes in the shape of the state, these tests will fail. The tests are fragile in the same way that our original tests using toEqual were. And while it’s easy to re-snapshot the tests with jest --updateSnapshot, this is really just deodorant over the underlying problem - an over-specified test.

Drawbacks to using selectors

We’ve seen that when we use selectors in our reducer tests we get tests which are more expressive, and less brittle. But what are some of the drawbacks?

One concern is that as our tests pull in more production code they become less focused. If we get a failure from a reducer test which is using a selector, we don’t initially know whether the issue is with the reducer we’re testing, or the selectors that we’re using to help us test. We may have to do a little digging to figure that out.

This is an example of the more general set of tradeoffs you find along the spectrum between focused, isolated tests and broader, integrated tests:

| more isolated tests are… | more integrated tests are… |
|---|---|
| focused: the reason for a test failure is easier to locate | broader: a failing test could have several causes |
| artificial: tendency to rely on test doubles, with a chance that these don’t reflect reality | realistic: real dependencies are used, tests are closer to production scenarios |
| brittle: changes in a dependency’s code are more likely to require test changes | supple: changes to dependencies are less likely to require changes to test code |

Given these tradeoffs, the best option is to create a blended test portfolio, where the benefits of each style of testing compensate for the drawbacks of the other styles.

Moving beyond ducks

At the start of this article I mentioned another post of mine which argues for including redux actions in our reducer tests, and shows how that revealed the shape of a larger organizational unit in our redux code - the duck. In that post I point out how action creators form a sort of public interface for the reducer, and that we should be testing the duck as an integrated unit.

We can now take that argument a step further. From our exploration of testing strategies, it seems clear that selectors form the missing second half of a duck’s public interface.

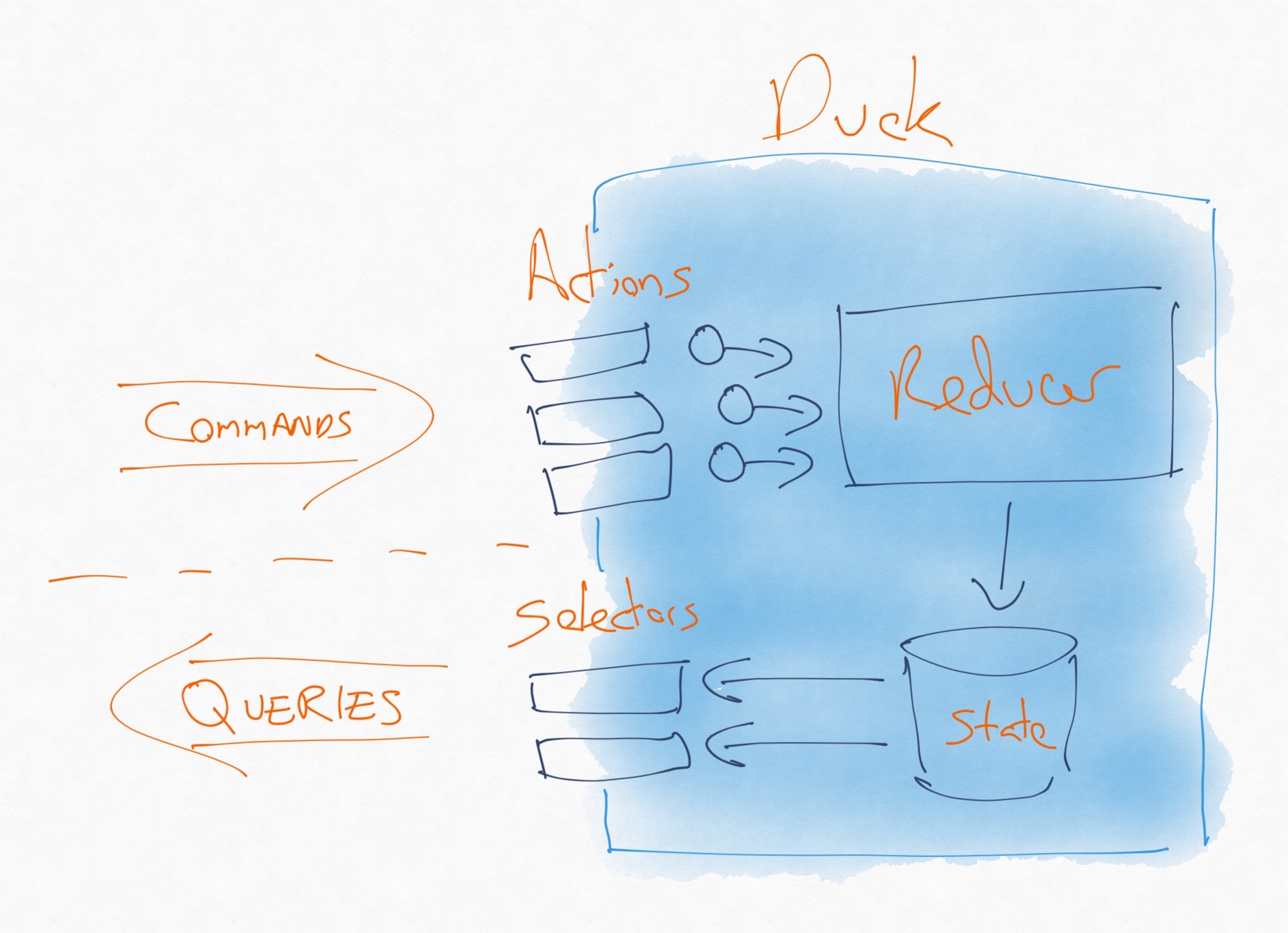

A duck module, where selectors provide a public query interface and actions a public command interface

This structure is quite reminiscent of a CQRS (Command Query Responsibility Segregation) architecture. Selectors are Queries, used to inspect the current state of our application, while Actions are Commands, used to effect changes to that state [1].

While the original proposal for the duck module doesn’t mention selectors, I believe it makes sense to expand the scope of the duck module to also include selectors. I’m not the first to point this out.
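As a rough illustration of that expanded duck, here is a sketch that groups the public interface into commands and queries. Everything in it is hypothetical: the `todosDuck` object, its `commands` and `queries` grouping, and the miniature `makeStore` with its `send` and `ask` methods are invented for this example and are not part of redux or of the original duck proposal.

```javascript
// Hypothetical duck: the reducer plus its public command and query interfaces.
const todosDuck = {
  reducer(state = [], action = {}) {
    switch (action.type) {
      case 'todos/add':
        return [...state, { ...action.payload, completed: false }];
      case 'todos/complete':
        return state.map((todo) =>
          todo.id === action.payload.id ? { ...todo, completed: true } : todo
        );
      default:
        return state;
    }
  },
  // Commands: action creators describe a requested change.
  commands: {
    addTodo: ({ id, text }) => ({ type: 'todos/add', payload: { id, text } }),
    completeTodo: ({ id }) => ({ type: 'todos/complete', payload: { id } }),
  },
  // Queries: selectors answer questions without exposing the state shape.
  queries: {
    isTodoCompleted: (state, todoId) =>
      state.some((todo) => todo.id === todoId && todo.completed),
    getTodoCounts: (state) => {
      const total = state.length;
      const complete = state.filter((todo) => todo.completed).length;
      return { total, complete, incomplete: total - complete };
    },
  },
};

// Hypothetical miniature store: callers send commands and ask queries,
// and never read the state directly.
function makeStore(duck) {
  let state = duck.reducer(undefined, { type: 'sketch/init' });
  return {
    send: (command) => {
      state = duck.reducer(state, command);
    },
    ask: (query, ...args) => query(state, ...args),
  };
}

const { commands, queries } = todosDuck;
const store = makeStore(todosDuck);
store.send(commands.addTodo({ id: 'todo-a', text: 'blah' }));
store.send(commands.addTodo({ id: 'todo-b', text: 'blah' }));
store.send(commands.completeTodo({ id: 'todo-b' }));

const answers = {
  aCompleted: store.ask(queries.isTodoCompleted, 'todo-a'), // false
  bCompleted: store.ask(queries.isTodoCompleted, 'todo-b'), // true
  counts: store.ask(queries.getTodoCounts), // { total: 2, complete: 1, incomplete: 1 }
};
console.log(answers);
```

The state itself stays private to the duck; the only way in is a command and the only way out is a query.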

Summarizing our options

In this article we’ve used four different approaches to validating expectations of redux state.

We used Jest’s toEqual matcher to do exact equality matching:

    expect(state).toEqual([
      {
        id: todoId,
        text: 'blah',
        completed: true
      }
    ]);

We used Jest’s objectContaining matcher for more flexible, partial matching:

    expect(state).toEqual([
      expect.objectContaining({
        id: todoId,
        completed: true
      })
    ]);

We used selectors:

    expect(isTodoCompleted(state,todoId)).toBe(true);

And finally we tried out Jest’s snapshot testing facility:

    expect(state).toMatchSnapshot();

Looking at these options side-by-side, it’s even clearer that selectors are the most expressive option. We’ve also seen that selectors keep our tests more flexible, and resilient to production code refactoring.

Next time you’re writing a reducer test, I hope you’ll consider using selectors to validate redux state. And let me know how it works out!

[1] I find it quite interesting that redux, which hews closely to a functional programming style, ends up modelling Command/Query Separation, which comes very much from an Object-Oriented perspective of software. My friend and former colleague Andrew Kiellor pointed this out to me.