Leading your engineers towards an AI-assisted future

June 26, 2025

“Listen, I’m wondering if our engineers are doing enough with AI…” your CEO says, as the two of you sit down for your 1:1.

“OK…”, you reply.

“I was chatting to a startup buddy of mine over the weekend, and she said that 50% of their code is written by AI now!”

“Well,” you stammer, “I’m not sure those kinds of metrics are actually that helpful…”

“Look”, your boss interjects, “I know there’s a lot of hype around this AI stuff, but it can’t be entirely without merit, right? I’m just worried that we’re falling behind the competition here.”

Sound familiar? Sound scary? Brave engineering leader, if you haven’t had a version of this discussion yet, it’s probably coming soon. Wouldn’t it be great to be at least one step ahead of this conversation?

In this post we’ll build out a strategy for adopting AI-assisted engineering - something that will drive meaningful improvements for your engineers and give your boss confidence that you have everything in hand during this tumultuous period.

Here’s how you’ll stay ahead: lead with experimentation, communicate clear direction using metrics, and provide organizational support along the way. Let’s walk through what that looks like.

Overall approach

Generative AI is a hugely impactful and genuinely novel technology. However, when it comes to driving adoption within an organization, we can lean on the same proven methodologies that supported previous waves of improvement (DevOps, test automation, observability, microservices, cloud computing, continuous delivery, etc.).

Our enablement approach will center around the concept of Aligned Autonomy. We’ll set our teams a clear initial goal - meaningful experimentation with AI-assisted coding - and define objective measures which track progress towards that goal. We’ll empower our teams with autonomy around exactly how they achieve this objective, while also providing a lot of organizational support along the way.

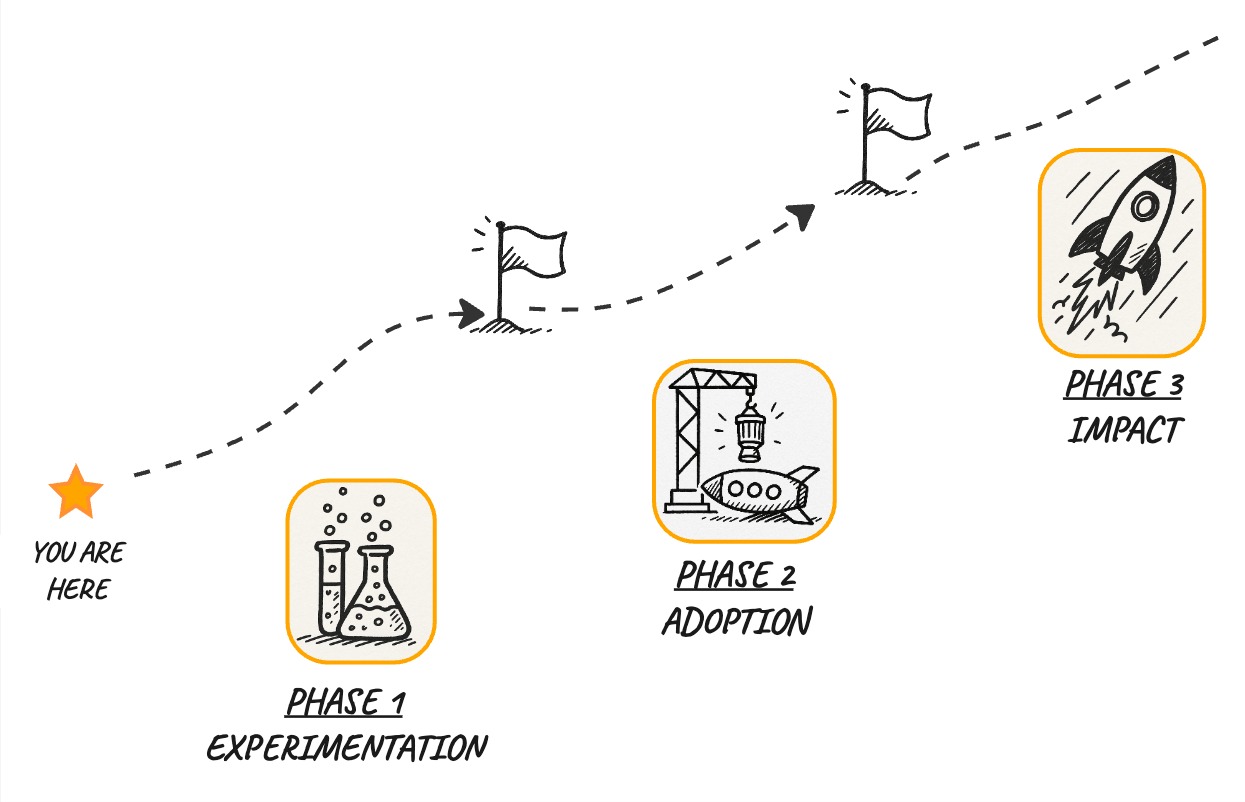

That objective I described - meaningful Experimentation - is the first phase of our journey. We’ll then transition to a second phase centered on the goal of Adoption: AI as a standard part of the day-to-day engineering toolkit. Once adoption is established, the focus shifts again, to measuring Impact - where is this new tool helping, and where is it causing issues?

As we move through AI adoption milestones our objectives change: from Experimentation to Adoption to Impact

Our teams will have autonomy on how to achieve the goals we’ve defined, but that doesn’t mean they should go it alone. We’ll set up organizational support along the way - providing training, making sure everyone has access to whatever tools and services they need, and establishing peer support via a Community of Practice.

But before we talk about organizational support, let’s dig into the motivation behind those phases of adoption.

Finding the Middle Path

Why should we start our AI adoption journey with an Experimentation phase? If our intention is to adopt AI-assisted coding, why shouldn’t we just start by establishing some metrics around AI-coding adoption and tell our teams to hop to it?

This capability for AI to write code is truly a tectonic shift in our industry, and it’s happening really fast. In my 20+ years in the industry I haven’t seen anything like it. There are an awful lot of overblown pronouncements about what AI can do, but at the same time the capabilities of AI coding systems are truly impressive, and improving fast. There’s clear potential for this technology to make at least part of an engineer’s day-to-day work obsolete, which of course makes the adoption of AI an emotionally fraught endeavor.

When you talk to engineers within your own org, you’re probably seeing some extremes of opinion. I see some engineers who are “all in” on AI, convinced that it will be doing all the coding within a year or two. Some of the engineers are already ceding way too much autonomy to AI, submitting large AI-authored pull requests after only a cursory review.

I believe the term-of-art is "vibe-coding".

It’s natural that some other engineers will be skeptical and defensive. If they’re also on the receiving end of those AI-generated PRs they’re even more likely to see themselves as brave guardians of a codebase’s internal quality, holding the line against the onslaught of sloppy AI software design.

A seasoned engineer, defending code quality

Your goal as an engineering leader is to guide your engineers to a middle path. On the one hand, coding agents are really useful for certain tasks today, and every engineer should be familiar with their capabilities and comfortable reaching for AI as a tool in the toolbox. On the other hand, AI is not currently capable of writing well-designed software without a human in the loop. Engineers need to learn how to compensate for the limitations of these magical genies, and resist the siren call of sloppy software design.

AI coding can provide mechanical advantage, while keeping human decision-making in the center

I believe that the best way to move your own engineering org towards this middle path is by encouraging engineers to meaningfully engage with this technology, in order to collectively understand both its capabilities and its limitations.

That’s why we start with an Experimentation phase. We are intentionally NOT asking engineers to meet a target of using AI for x% of their PRs. We’re just asking engineers to spend time with this amazing and scary technology, so that we can collectively figure out the right way to wield it.

Additionally, this technology is evolving FAST. The tools and techniques that are state-of-the-art today will very likely be old hat within a year or two. To keep up, our engineering organizations need to establish a strong culture of ongoing experimentation and knowledge sharing. That foundation is what we’ll be building first.

Driving Experimentation and Adoption

We understand our initial goal: experimentation in AI-assisted coding across the organization. We want each team to develop a familiarity with AI’s capabilities and limitations - to figure out where it works for them and where it doesn’t - and share what they’re learning with other teams.

Now, how do we actually make that happen?

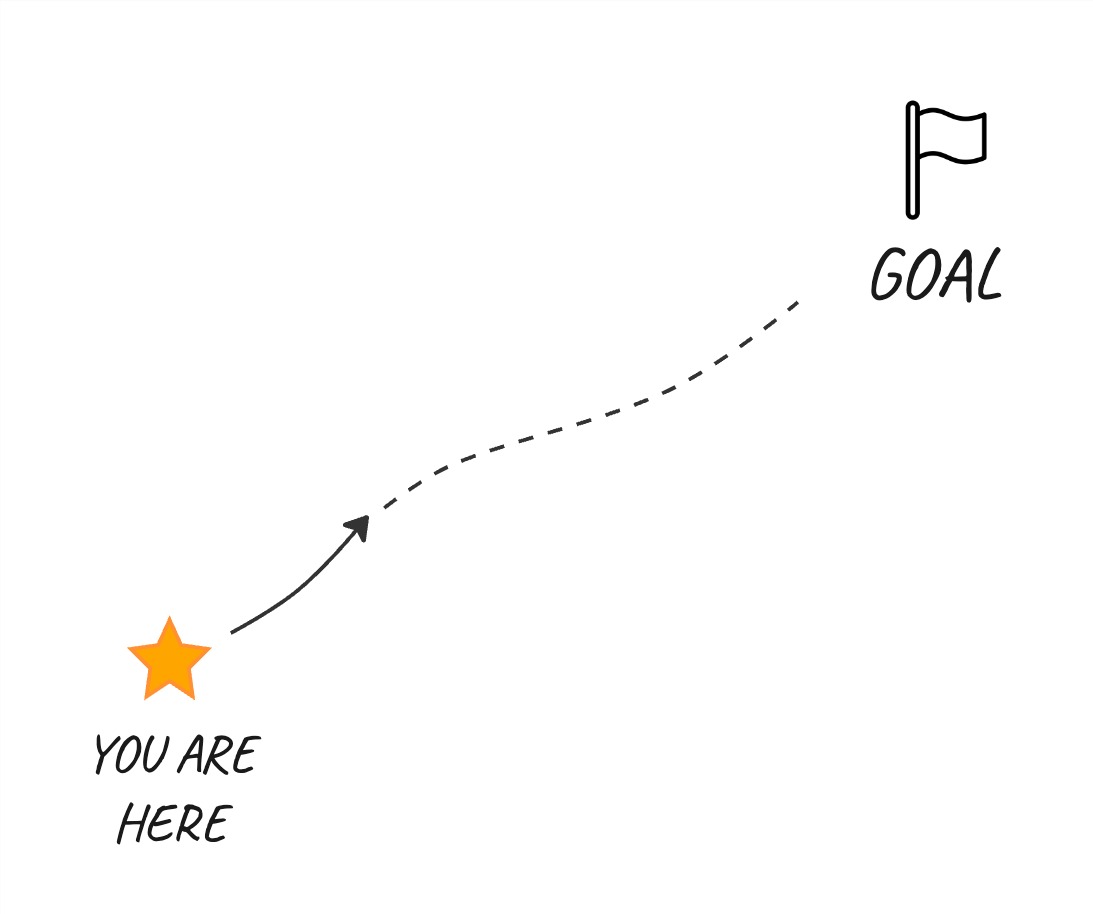

For any such organizational change we can guide teams towards a goal by establishing KPIs which clearly communicate current state and target state - where we are and where we want to get to.

KPIs can objectively illuminate both where we are today, and where we want to get to.

Note that, while KPIs (or metrics or measures or data-driven decisions or whatever you call them) are a useful tool, engineers have a natural skepticism; we should be careful to avoid turning into a pointy-haired boss. I’ll touch on this a bit more in the Organizational Support section.

Metrics should be a useful tool for your team, and not invoke this image.

We’ll want to start by defining metrics for our initial Experimentation phase. At this point we simply want to measure whether teams are making a meaningful investment in trialing AI-assisted coding, and sharing what they learn. Some basic metrics would be:

- number of tools piloted by each team, categorized by type

- has the team done an AI tool retro in the last month

- how much activity is there in the #ai-coders Slack channel

- what is attendance like for Community of Practice meetings (see the Support section below for more on establishing a Community of Practice)

We’re really just looking for some indication that teams are making an effort to try these new tools out.

The next behavior change we’d be looking to measure is actual Adoption of AI tooling. Each organization will of course have nuances in what they want to measure, but at a minimum I’d be looking to track:

- Usage of tools - which teams are using which tools for which tasks (code completion, agentic coding, code review, UX prototyping, etc.). This is the primary metric we’re looking to move.

- Sentiment - Does AI make you more productive?

Eventually we will want to start measuring Impact - what does AI assistance do in terms of productivity, quality, agility, etc. In my opinion the best approach here is to use the same sort of general metrics you’d use for engineering productivity - things like DORA, SPACE, etc. If you’re also measuring AI adoption for teams then you can combine these two sets of metrics to figure out whether AI adoption affects things like Change Failure Rate, Lead Time, MTTR, etc.
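To make "combine these two sets of metrics" a little more concrete, here is a minimal Python sketch. It assumes a hypothetical per-team, per-quarter record that joins an adoption figure from your survey with deployment counts from your delivery tooling, and compares the DORA Change Failure Rate (failed deployments divided by total deployments) for higher- and lower-adoption teams. The field names and the 0.5 adoption threshold are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TeamQuarter:
    """One team's numbers for one quarter (hypothetical schema)."""
    team: str
    ai_usage_share: float    # fraction of the team reporting regular AI use, 0..1
    deployments: int         # production deployments in the quarter
    failed_deployments: int  # deployments that led to a failure in production


def change_failure_rate(row: TeamQuarter) -> float:
    """DORA Change Failure Rate: failed deployments / total deployments."""
    return row.failed_deployments / row.deployments if row.deployments else 0.0


def compare_by_adoption(rows: list[TeamQuarter], threshold: float = 0.5) -> dict:
    """Mean Change Failure Rate for teams at/above vs. below an adoption threshold."""
    high = [change_failure_rate(r) for r in rows if r.ai_usage_share >= threshold]
    low = [change_failure_rate(r) for r in rows if r.ai_usage_share < threshold]
    return {
        "high_adoption_cfr": mean(high) if high else None,
        "low_adoption_cfr": mean(low) if low else None,
        "teams_high": len(high),
        "teams_low": len(low),
    }


# Illustrative, made-up numbers for three teams.
rows = [
    TeamQuarter("payments", 0.8, 20, 2),
    TeamQuarter("search", 0.2, 10, 3),
    TeamQuarter("platform", 0.6, 10, 1),
]
print(compare_by_adoption(rows))
```

With only a handful of teams, and many other things differing between them, a gap between the two groups is a prompt for a conversation rather than evidence that AI adoption caused it.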

If your organization is already measuring engineering productivity metrics - either via a product (Multitudes, LinearB, DX, Jellyfish, etc.) or via an internal platform - then you’ll likely want to leverage that for measuring these AI metrics too. But, don’t feel that you need to set up a whole fancy system just for this - a simple Google Form survey sent out to staff every 2 weeks is a great start.
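If you go the survey route, Google Forms lets you download responses as a CSV file, and a few lines of Python are enough to roll them up. This sketch assumes hypothetical column headers ("Team", "Tools used" as a comma-separated list, and "Productivity (1-5)" for the "Does AI make you more productive?" question); rename them to match your own form. It deliberately aggregates to team level rather than reporting individuals, in line with the advice later in this post about not drilling into a specific person's numbers.

```python
import csv
import io
from collections import defaultdict
from statistics import mean


def summarize_survey(csv_text: str) -> dict:
    """Roll one round of survey responses up to team level.

    Assumes hypothetical headers "Team", "Tools used" (comma-separated tool
    names) and "Productivity (1-5)". Adjust them to match your own form.
    """
    by_team = defaultdict(
        lambda: {"respondents": 0, "tool_counts": defaultdict(int), "scores": []}
    )
    for row in csv.DictReader(io.StringIO(csv_text)):
        stats = by_team[row["Team"].strip()]
        stats["respondents"] += 1
        # Count how many people on the team reported using each tool.
        for tool in row["Tools used"].split(","):
            if tool.strip():
                stats["tool_counts"][tool.strip()] += 1
        # Sentiment is optional; skip blank answers.
        score = row["Productivity (1-5)"].strip()
        if score:
            stats["scores"].append(int(score))
    return {
        name: {
            "respondents": s["respondents"],
            "tool_counts": dict(s["tool_counts"]),
            "mean_sentiment": mean(s["scores"]) if s["scores"] else None,
        }
        for name, s in by_team.items()
    }


sample = (
    "Team,Tools used,Productivity (1-5)\n"
    'Payments,"Cursor, Claude Code",4\n'
    "Payments,Cursor,2\n"
    "Search,,\n"
)
print(summarize_survey(sample))
```

Running this once per survey round and keeping each result gives you the time series you need for trend reporting.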

Using data to communicate expectations

With initial metrics identified, you can now communicate a clear target for your teams, starting with those Experimentation metrics. Something along the lines of:

“by the end of the month we expect all teams to have piloted at least two tools in each of these categories, held an internal retro, and identified next steps for experimentation”.
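A target phrased like this is easy to check mechanically. The sketch below is one possible encoding, assuming a hypothetical per-team record of which tools were piloted in each category plus two yes/no flags; the category names are placeholders. It returns the remaining gaps rather than a pass/fail score, which keeps the focus on next steps.

```python
from dataclasses import dataclass, field


@dataclass
class TeamExperimentation:
    """Where one team stands against the month-end Experimentation target (hypothetical schema)."""
    team: str
    tools_piloted: dict[str, set[str]] = field(default_factory=dict)  # category -> tool names
    held_retro: bool = False
    next_steps_identified: bool = False


def gaps_to_target(t: TeamExperimentation, categories: list[str], min_tools: int = 2) -> list[str]:
    """Return what the team still needs to do; an empty list means the target is met."""
    gaps = []
    for category in categories:
        piloted = len(t.tools_piloted.get(category, set()))
        if piloted < min_tools:
            gaps.append(f"pilot {min_tools - piloted} more {category} tool(s)")
    if not t.held_retro:
        gaps.append("hold an internal retro")
    if not t.next_steps_identified:
        gaps.append("identify next steps for experimentation")
    return gaps


payments = TeamExperimentation(
    "payments",
    {"auto-complete": {"Copilot", "Cursor"}, "agentic coding": {"Claude Code"}},
    held_retro=True,
)
print(gaps_to_target(payments, ["auto-complete", "agentic coding"]))
```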

As teams progress beyond experimentation into adoption, their target can shift to focus more on Adoption metrics.

The intention here is to define a clear target for your teams, with objective feedback on their progress, while still giving each team autonomy on how to achieve that goal. That’s what Mission Command looks like in practice - leadership states the intent, and the team decides how to get there:

“Here’s where you are today according to the most recent AI adoption survey. Here’s how those metrics compare to other teams, and here’s how it compares to our goal for the end of the quarter.

What are your plans for achieving that goal, and what can the organization do to support you?”

Supporting AI adoption

That last part is really important - beyond setting expectations for your teams, you also need to provide support along the way.

Let’s talk about the different ways you should be supporting the adoption of AI-assisted engineering.

Suggest some basic program structure

Beyond providing a metrics-driven Mission Command structure, I think it’s valuable to provide some lightly-prescriptive guidance, particularly at the start of this sort of enablement program. For example, you could suggest that all teams start off with the following:

- a kickoff session to discuss the program, share goals, brainstorm ideas

- scheduled informal lunch-and-learns for people to share what they’re doing

- a team retro after two weeks to discuss what’s working and what’s not with respect to AI adoption

It’s OK if teams opt for a different approach to achieve their target - that’s the autonomy part of Aligned Autonomy - but it’s good to give them a nudge in the right direction.

Training

Using AI in a productive way means learning a new skill set; you should consider a training program to accelerate development of those skills.

Here’s a general outline for training a product engineering team (unsurprisingly, this is also the structure of the live workshops I offer!):

1. LLM 101

- How LLMs work

- Where do LLMs get their inputs (training set + context window)

- chat sessions under the hood - LLMs have no inherent memory

- overcoming these limitations (tools, prompt engineering, RAG)
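The "no inherent memory" point tends to click once people see what a chat client actually does. Below is a minimal Python sketch: `complete` is a hypothetical stand-in for an LLM API call that takes the full message list and returns a reply, which is broadly how chat-style APIs work. The conversation "memory" lives entirely on the client side and is resent on every turn; throw the list away and the model has nothing to remember.

```python
from typing import Callable

Message = dict[str, str]  # {"role": ..., "content": ...}


class ChatSession:
    """Client-side chat session. The model is stateless: the only 'memory' is
    this list of messages, which is resent in full on every turn."""

    def __init__(self, complete: Callable[[list[Message]], str], system_prompt: str):
        self.complete = complete  # hypothetical stand-in for an LLM API call
        self.messages: list[Message] = [{"role": "system", "content": system_prompt}]

    def send(self, user_text: str) -> str:
        self.messages.append({"role": "user", "content": user_text})
        # The entire transcript goes into the context window on every call.
        reply = self.complete(list(self.messages))
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def fake_model(messages: list[Message]) -> str:
    # Stand-in model that just reports how much context it was given.
    return f"I can see {len(messages)} messages"


chat = ChatSession(fake_model, "You are a helpful coding assistant.")
print(chat.send("Hi"))             # model sees the system prompt + 1 user message
print(chat.send("Remember me?"))   # model sees the whole history again
chat.messages = chat.messages[:1]  # drop the history...
print(chat.send("Remember me?"))   # ...and the model has "forgotten" everything
```

The same idea explains the workarounds in the last bullet: tools, prompt engineering and RAG are all ways of deciding what goes into that message list.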

2. How AI can help product delivery teams

Walk through the major categories/use-cases for AI assistance: what they do, and what they’re good for.

- auto-complete (e.g. Copilot, Cursor, Windsurf)

- agentic coding (Copilot in agent mode, Cursor, Windsurf, Claude Code, Amp)

- async agentic coding (Devin, Codex, GitHub Copilot coding agent)

- vibe-coding throw-away prototypes (v0)

- vibe-coding internal tools (v0, Lovable, etc.)

- code review (and why it DOES NOT replace human code review)

- technical design (deep research)

3. Agentic coding deep dive

What AI coding agents are, why they’re such a leap forward, and how to use them effectively.

- building your own coding agent

- memory/rules

- managing context

- Context-Constraint framework

- chain-of-vibes

- tools/MCP
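"Building your own coding agent" is less daunting than it sounds, because the core is a short loop. This Python sketch is a teaching toy, not how any particular product is implemented: a hypothetical `model` function returns either a tool request or a final answer, the harness runs the requested tool, appends the result to the context, and repeats. A scripted fake model and an in-memory "repository" stand in for a real LLM and real files so the sketch runs as-is. MCP (Model Context Protocol) is a standard way to expose tools like these to agents; this sketch does not implement it.

```python
import json
from typing import Callable

# Hypothetical in-memory "repository" so the sketch runs without touching disk.
FAKE_REPO = {"README.md": "# Demo service", "app.py": "print('hello')"}

# Tools are plain functions the harness is willing to run on the model's behalf.
TOOLS: dict[str, Callable[..., str]] = {
    "list_files": lambda: "\n".join(sorted(FAKE_REPO)),
    "read_file": lambda path: FAKE_REPO.get(path, f"error: {path} not found"),
}


def run_agent(model: Callable[[list[dict]], dict], task: str, max_steps: int = 10) -> str:
    """Core agent loop: ask the model what to do next, execute any tool it
    requests, append the result to the context, and repeat until it answers."""
    messages = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        # Assumed action shape: {"tool": name, "args": {...}} or {"answer": text}.
        action = model(messages)
        if "answer" in action:
            return action["answer"]
        tool = TOOLS.get(action["tool"])
        result = tool(**action.get("args", {})) if tool else f"error: unknown tool {action['tool']}"
        messages.append({"role": "assistant", "content": json.dumps(action)})
        messages.append({"role": "tool", "content": result})
    return "stopped: step limit reached"


def scripted_model(messages: list[dict]) -> dict:
    # Stand-in for a real LLM: list the files, read app.py, then answer.
    results = [m["content"] for m in messages if m["role"] == "tool"]
    if not results:
        return {"tool": "list_files"}
    if len(results) == 1:
        return {"tool": "read_file", "args": {"path": "app.py"}}
    return {"answer": f"app.py contains: {results[-1]}"}


print(run_agent(scripted_model, "What does app.py do?"))
```

The `max_steps` cap is a simple guard against an agent that never decides it is done; real agents layer context management and memory/rules on top of this same loop.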

Bonus: Office Hours

Ongoing sessions where engineers can bring challenges they’ve had in using AI, and get advice from others.

With such a fast-moving landscape this training program is likely to evolve, but the basic structure is to provide a grounding in how the magic works, introduce the different tools, and then spend a good amount of time understanding the specific (often unintuitive!) techniques that let you get the most out of AI’s jagged intelligence.

Remove organizational impediments

Part of your job as a leader is to clear organizational impediments. When it comes to AI tooling the two most common impediments are budget and compliance/legal concerns.

I’m surprised by how often engineers talk about the cost of AI coding, expressing concern that tool X costs $200 a month more than tool Y. Given the fully loaded cost of an engineer and the boost they can get from AI assistance, $200 a month is really a marginal cost. Communicate this logic clearly to your engineers, and make sure they have whatever budget approvals they need.
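One way to make that logic concrete is a break-even calculation. Assuming, purely for illustration, a fully loaded engineer cost of $C = \$200{,}000$ per year (substitute your own figure), the productivity gain $g$ at which an extra $200 a month pays for itself is:

```latex
g_{\text{break-even}}
  = \frac{12 \times \$200}{C}
  = \frac{\$2{,}400}{\$200{,}000}
  = 0.012 = 1.2\%
```

On a 40-hour week, 1.2% is just under half an hour. The higher your fully loaded cost, the smaller the gain needed to break even.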

When it comes to legal/compliance concerns you should be going to bat on behalf of your teams, getting clearance for at least a “pilot program” level of access to the latest tools. A lot of these tools support self-hosted or dedicated-tenant deployment models if required, and at this point all the major vendors are very experienced at selling into risk-sensitive enterprises and will be more than happy to support you!

You know you’re doing a good job here if an engineer is able to start experimenting with one of the more popular AI coding tools without having to incur multiple days of administrative form-filling.

Providing time

I’m guessing your engineering teams don’t just happen to have spare time to spend experimenting with cool new technology. Every team I’ve ever worked with is working right at the limits of their capacity.

With this new enablement program you’re asking your engineers to spend a good amount of time and attention on something, and it’s up to you to make sure they have the capacity to do so. That means working with peers within your leadership team to carve out space in your product roadmap. It wouldn’t hurt to ask for a bit of executive cheerleading for this experimentation program while you’re at it.

Don’t forget to also make it clear to your teams that they have support to spend time on this work - it’s easy for some teams to get stuck in the status quo of feature delivery and bug fixing unless you explicitly tell them to make time for other things.

Bootstrapping a Community of Practice

Strong systems for internal peer support - aka a Community of Practice - can provide an outsized boost in the adoption of a new thing. You’ll want to establish some support infrastructure for an AI coding CoP (or possibly a slightly wider scope).

While these communities should primarily be grass-roots and centered on practitioners, as a leader you should plan to provide some initial support. Assign someone clear accountability for bootstrapping your CoP. They should establish an initial set of meetings and comms touch-points, and cheerlead participation. That initial CoP infrastructure might include:

- an #ai-coders Slack group (or mailing list, IRC channel, etc.)

- a weekly informal “AI Coffee” meeting

- a bi-weekly lunch-and-learn where people can informally present what they’ve been doing, or get together and watch videos

- a “what’s happening with AI coding” newsletter (or all-hands update)

- a book club

- an outside lecture series

Leading with Empathy

As we’ve already touched on a couple of times, AI-assisted engineering is a huge shift in our industry. Some people are legitimately concerned that it will take their jobs, or at the very least will take away the most rewarding part of their jobs (the coding part).

A vital aspect of your role as a leader is empathy. Understand that some engineers will have this concern, and don’t diminish it but rather address it head-on. Talk about it in all-hands type meetings, but also make yourself available for smaller group or individual conversations.

I personally believe it’s unavoidable that AI will have a permanent impact on how we do our work as engineers, but I don’t see it as a negative. Firstly, I think that the work that gets automated will primarily be the tedious parts of our job - removing a feature flag, remembering the details of an API, upgrading a library, centering a div in CSS. Secondly, Jevons’ Paradox will kick in (when something becomes cheaper to produce, demand tends to grow so much that we end up consuming more of it, not less) - in fact I think it already is. Even though AI is making software much cheaper to create, there is so much useful software waiting to be written that it will keep us software developers in work for the foreseeable future, even if AI is doing the tedious typing part.

Speaking of fears and concerns, you should also make sure that the metrics you’re using to guide your adoption journey aren’t misinterpreted or misused. Standard rules apply: make it clear to teams that the measures are primarily a tool for them to use to gauge their own progress. Avoid drilling down into a specific person’s numbers. Don’t share granular metrics with leadership.

Communicating progress

We’ve talked about measuring current state, defining a target for future state, and supporting teams along the way. We also need to help teams understand how they’re progressing, and report the progress overall to folks outside of the engineering org.

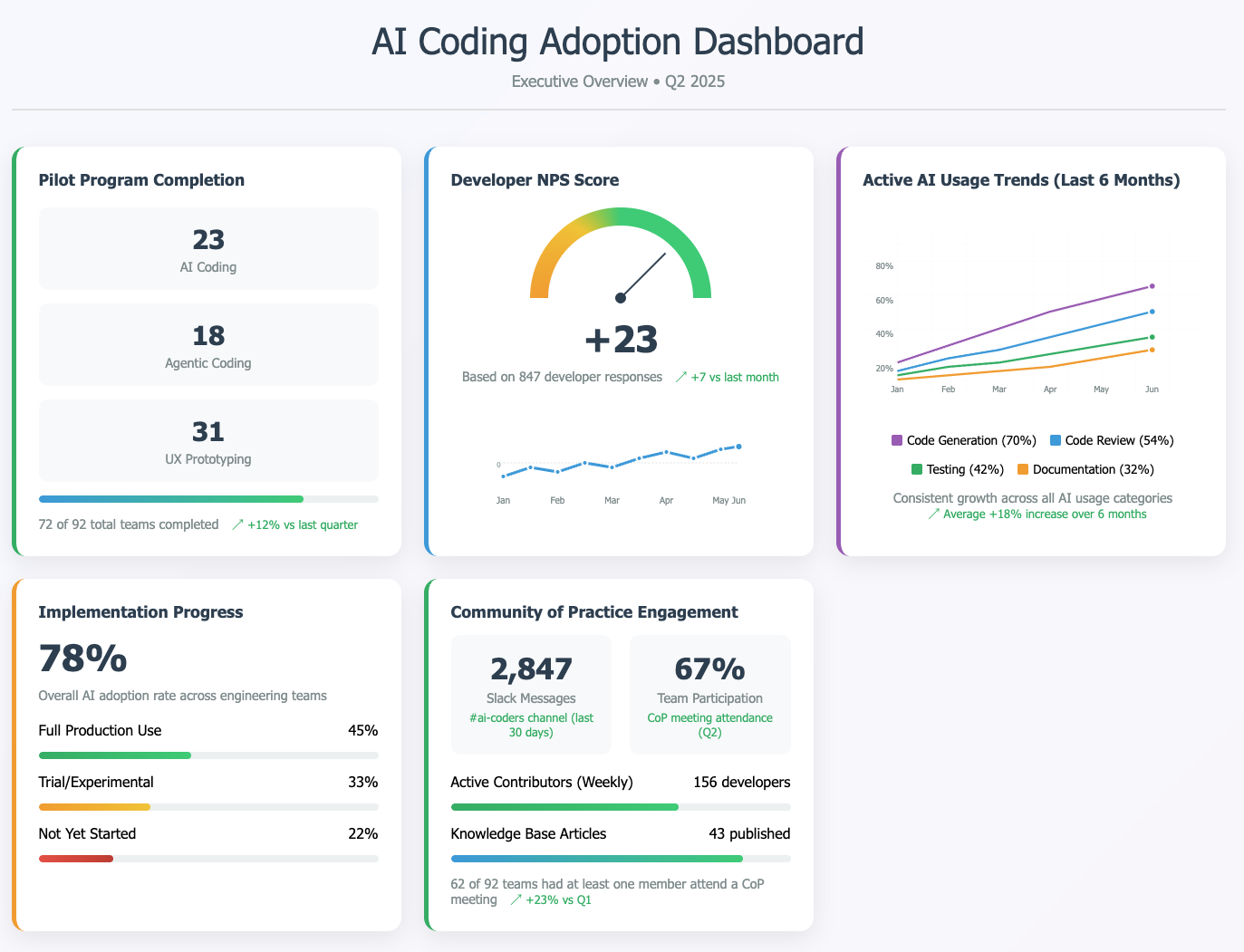

This doesn’t have to be complicated. A simple dashboard that captures trends over time can be enough to report overall progress.

A mocked-up exec dashboard, courtesy of Claude

For managers within the engineering org you’ll probably want a more granular report showing the metrics for their own teams. That report should help them compare their team’s progress against the org-wide average and against the target state, and it should be the starting point for a status update conversation.
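The core of such a manager-level report is a simple comparison. This Python sketch assumes a hypothetical metric (the share of each team's engineers using AI tools weekly) stored as a team-to-value mapping, and reports where one team sits relative to the org average and how far it is from the target. The team names and numbers are made up.

```python
from statistics import mean


def team_report(team: str, metric_by_team: dict[str, float], target: float) -> dict:
    """Compare one team's metric with the org-wide average and the target state."""
    org_average = mean(list(metric_by_team.values()))
    value = metric_by_team[team]
    return {
        "team": team,
        "value": value,
        "org_average": org_average,
        "vs_average": value - org_average,             # positive means ahead of the average
        "gap_to_target": max(target - value, 0.0),     # 0.0 once the target is reached
    }


# Hypothetical metric: share of each team's engineers using AI tools weekly.
weekly_ai_use = {"payments": 0.6, "search": 0.3, "platform": 0.45}
print(team_report("search", weekly_ai_use, target=0.7))
```

Keeping the output at team level, and framing it as "where you are, where the org is, where we're heading", matches the Mission Command style of conversation described earlier.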

Conclusion

While generative AI is very new, adopting AI-assisted development doesn’t require reinventing the organizational change playbook. By applying proven enablement strategies - clear metrics, aligned autonomy, and management support - you can guide your teams through this transformation thoughtfully and effectively, and communicate concrete progress to the rest of the organization (and your boss!)

The key is to start with experimentation rather than jumping straight to productivity metrics. Give your teams the autonomy to discover what works for them, while providing the training, tools, and support they need to succeed. Your goal is to help your engineering org find that middle path, where AI is doing some of the tedious heavy lifting, but not building up slop in your codebases.

I can help YOU on YOUR journey

If you made it this far then hopefully you’ve gotten the sense that I have some experience helping engineering teams adopt new ways of working. I’ve also spent a lot of time figuring out the right ways to use AI for coding.

Helping engineering teams grow is what I do - if you’re looking for some support on your AI adoption adventures, get in touch!

Acknowledgements

Many thanks to Justin Abrahms and Roger Marley for reviewing an early draft and offering valuable feedback and suggestions.